
The autonomous vehicle, one capable of driving itself without any human intervention, is quickly becoming a reality. A truly driverless vehicle must "think" like a human driver and make decisions in real time: obeying traffic laws, avoiding obstacles and other vehicles, and planning and navigating routes.

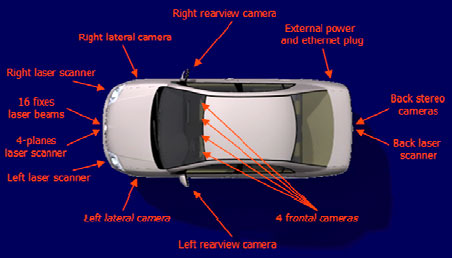

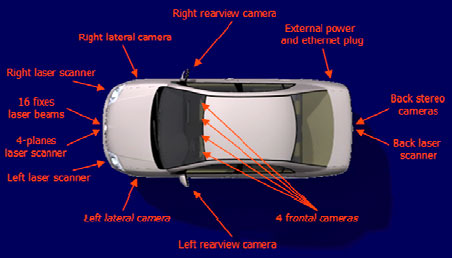

Researchers at VisLab, a spin-off company of the University of Parma, Italy, that specializes in vehicular applications involving both environmental perception and intelligent control, developed a prototype vehicle called BRAiVE (short for BRAin-drIVE). The off-the-shelf car is equipped with a range of sensing technologies for perception, navigation and control. The perception system is based mainly on vision, complemented by four laser scanners, sixteen laser beams, GPS and an Inertial Measurement Unit (IMU), while full X-by-wire actuation makes autonomous driving possible. Ten cameras gather information about the vehicle's surroundings. The images are processed in real time, together with information from the navigation system, to produce the steering and throttle commands.

FIGURE 1 (Front Detection). The cameras assist in front obstacle detection, intersection estimation, parking space detection, blind spot monitoring and rear obstacle detection.

Four Point Grey Dragonfly2 cameras, two with color sensors and two with mono sensors, are mounted behind the upper part of the windshield. They are used for forward obstacle and vehicle detection, lane detection and traffic sign recognition. Two more Dragonfly2 cameras are mounted over the front mudguards, behind the car body and looking sideways, and are used for parking and intersection detection. Two Firefly MV cameras integrated into the rearview mirrors detect overtaking vehicles, and a final pair of Dragonfly2 cameras monitors nearby obstacles during driving.
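To make the layout easier to check, the camera arrangement described above can be written down as data. This is only an illustrative sketch. The `CameraGroup` class and its field names are hypothetical, and the mounting position of the last Dragonfly2 pair is left unspecified because the text does not give it.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraGroup:
    """One group of identical cameras (hypothetical structure for illustration)."""
    model: str
    count: int
    mount: str
    tasks: tuple


# Camera groups as listed in the text above.
BRAIVE_CAMERAS = [
    CameraGroup("Dragonfly2", 4, "behind upper windshield (2 color, 2 mono)",
                ("forward obstacle/vehicle detection", "lane detection",
                 "traffic sign recognition")),
    CameraGroup("Dragonfly2", 2, "over front mudguards, looking sideways",
                ("parking", "intersection detection")),
    CameraGroup("Firefly MV", 2, "rearview mirrors",
                ("overtaking vehicle detection",)),
    CameraGroup("Dragonfly2", 2, "not specified in the text",
                ("nearby obstacle monitoring",)),
]


def cameras_per_model(groups):
    """Total number of cameras of each model across all groups."""
    tally = Counter()
    for group in groups:
        tally[group.model] += group.count
    return dict(tally)


total = sum(group.count for group in BRAIVE_CAMERAS)
print(total)                              # 10
print(cameras_per_model(BRAIVE_CAMERAS))  # {'Dragonfly2': 8, 'Firefly MV': 2}
```

The total matches the ten cameras mentioned earlier, eight of them Dragonfly2 units.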

Depending on the situation, images are acquired either via external trigger or in free-running mode, using Format 7 Mode 0 (region-of-interest mode). The raw Bayer data is color-processed on board the cameras and then streamed over the FireWire interface at S400 speed to the vision system. There, stereo algorithms reconstruct the 3D environment and provide information about the vehicle's immediate surroundings.
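Stereo reconstruction rests on triangulation. For a rectified stereo pair, suppose a point appears with a horizontal disparity of d pixels between the left and right images. Its depth is then Z = f * B / d, where f is the focal length in pixels and B is the baseline between the two cameras. The sketch below illustrates only this textbook relation, not VisLab's actual stereo algorithms. The focal length and baseline values are hypothetical.

```python
from typing import Optional


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Depth (meters) of a point seen by a rectified stereo pair: Z = f * B / d.

    disparity_px: horizontal shift of the point between left and right images.
    A non-positive disparity has no valid depth, so None is returned.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


# Hypothetical calibration values, not BRAiVE's actual parameters.
FOCAL_PX = 800.0   # focal length expressed in pixels
BASELINE_M = 0.30  # distance between the two camera centers, in meters

for d in (48.0, 24.0, 12.0, 0.0):
    print(d, disparity_to_depth(d, FOCAL_PX, BASELINE_M))
# 48.0 5.0
# 24.0 10.0
# 12.0 20.0
# 0.0 None
```

Depth is inversely proportional to disparity, so halving the disparity doubles the distance. As a result, a one-pixel matching error costs far more accuracy on distant objects than on nearby ones.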

“We selected Point Grey cameras for a number of reasons, most notably their outstanding image quality, the ability to control parameters such as shutter, gain, and white balance with custom algorithms, and the compatibility with ultra-compact M12 microlens mounts, which have played a key role,” explains Dr. Alberto Broggi, Director of VisLab. Paolo Grisleri, responsible for vehicle integration, adds, “The camera size for integration in the rearview mirrors, availability of third-party software for Linux, as well as good price/performance were other significant factors in our decision.”
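Dr. Broggi mentions controlling white balance with custom algorithms, but the source does not describe them. One common textbook approach is the gray-world method, which assumes the scene averages to neutral gray. It scales the red and blue channels so that their means match the green mean. The sketch below applies the gains in software purely for illustration; on a real camera, equivalent gains would typically go into the camera's own white-balance settings. The function names are hypothetical.

```python
def gray_world_gains(pixels):
    """Return (gain_r, gain_g, gain_b) under the gray-world assumption.

    pixels: iterable of (r, g, b) tuples. Green is the reference channel,
    so red and blue are scaled to match the green mean.
    """
    n = 0
    sum_r = sum_g = sum_b = 0.0
    for r, g, b in pixels:
        sum_r += r
        sum_g += g
        sum_b += b
        n += 1
    if n == 0 or sum_r == 0 or sum_b == 0:
        raise ValueError("need a non-empty image with non-zero red and blue")
    # All channel means share the same n, so ratios of sums equal ratios of means.
    return sum_g / sum_r, 1.0, sum_g / sum_b


def apply_gains(pixels, gains, max_value=255):
    """Scale each channel by its gain, rounding and clipping to max_value."""
    gain_r, gain_g, gain_b = gains
    return [
        (min(max_value, round(r * gain_r)),
         min(max_value, round(g * gain_g)),
         min(max_value, round(b * gain_b)))
        for r, g, b in pixels
    ]


# A tiny two-pixel image with a warm (reddish) color cast.
image = [(200, 100, 50), (120, 60, 30)]
gains = gray_world_gains(image)
print(gains)                      # (0.5, 1.0, 2.0)
print(apply_gains(image, gains))  # [(100, 100, 100), (60, 60, 60)]
```

Gray-world is cheap enough for real-time use, but it fails on scenes dominated by one color. This is one reason a vision team might prefer its own tuned algorithm.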

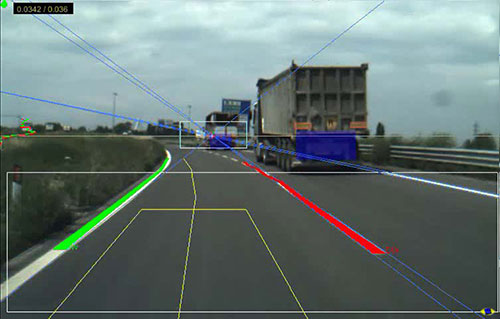

FIGURE 2 (Cameras and Lasers). The vehicle uses Dragonfly2s and Firefly MVs.

BRAiVE's intelligent capabilities include crossing assistance, obstacle and pedestrian detection, parking assistance, road sign detection and lane marking detection, as well as stop & go and automatic cruise control. All sensors, actuators and control devices are fully integrated into the car, giving passengers the feeling of riding in a normal vehicle. BRAiVE was first shown to the press in a public demonstration on April 3, 2009, in Parma, Italy, and was then presented at the 2009 IEEE Intelligent Vehicles Symposium in Xi’an, China.

“Artificial vision is a promising technology for cars, trucks, road construction, mining vehicles and indeed military vehicles, thanks to the low cost and great capabilities that vision sensors are currently demonstrating,” adds Paolo Grisleri. He is confident that the technology embedded in this vehicle will constitute the basis for developing innovative concepts for the car industry and full vehicle autonomy in the next few years.


ACRONYMS & ABBREVIATIONS A-Z

ACOMMS acoustic communications systems

ACTD Advanced Concept Technology Demonstration

AEHF advanced extremely high frequency

AGV automated guided vehicle

AINS Autonomous Intelligent Network and Systems (initiative)

ANS Autonomous Navigation System

ARPA Advanced Research Projects Agency

ARTS All Purpose Remote Transport System

ATD Advanced Technology Demonstration

AV autonomous vehicle

C2 command and control

C2S Command and Control System

C2V command and control vehicle

C3 command, control, and communications

C3I command, control, communications, and intelligence

C4 command, control, communications, and computers

C4I command, control, communications, computers, and intelligence

CDL common data link

CECOM Communications Electronics Command

CHBDL common high-bandwidth data link

COMINT communications intelligence

CONOPS concept(s) of operations

COTS commercial off-the-shelf

CSP constraint satisfaction problem

CTFF Cell Transfer Frame Format

CWSP Commercial Wideband Satellite Program

DARPA Defense Advanced Research Projects Agency

DOT Department of Transportation

EHF extremely high frequency

ELINT electronic intelligence

EM/EO electromagnetic/electro-optical

EMS electromagnetic sensing

EO/IR electro-optical/infrared

FDI fault detection and isolation

FDOA frequency difference of arrival

FMEA failure modes and effects analysis

FOC final operational capability

Gbps gigabits per second

GBS Global Broadcast System

GEOS Geosynchronous Earth Orbit Satellite

GHz gigahertz

GIG Global Information Grid

GIG-BE Global Information Grid-Bandwidth Expansion

GIG-E Global Information Grid-Expansion

GPS Global Positioning System

HAE high-altitude and -endurance

HALE high-altitude, long-endurance

HDTV high-definition television

HMMWV high mobility multipurpose wheeled vehicle

IMINT imagery intelligence

IMU inertial measurement unit

IOC initial operating capability

IP Internet Protocol

IR infrared

JPO Joint Program Office

JSTARS Joint Surveillance Target Attack Radar System

kbps kilobits per second

LADAR laser detection and ranging

L/D lift to drag (ratio)

LD MRUUV large-diameter multi-reconfigurable UUV

LDR low data rate

LEOS Low Earth Orbiting Satellite

LIDAR light detection and ranging

LOA level of autonomy

LOS line of sight

LRIP low-rate initial production

MAE medium-altitude and -endurance

MARS Mobile Autonomous Robot Software

MCG&I mapping, charting, geodesy, and imagery

MDARS-E/I Mobile Detection Assessment Response System-Exterior/Interior

MDR medium data rate

MICA Mixed Initiative Control of Automa-teams (program)

MMS Mission Management System

MPM mission payload module

MP-RTIP Multi-Platform Radar Technology Insertion Program

MRD Maritime Reconnaissance Demonstration (program)

MRUUV multi-reconfigurable UUV

MTI moving target indicator

MULE multifunction utility logistics equipment (vehicle)

NBC nuclear, biological, and chemical

NII Networks and Information Integration

NMRS Near-term Mine Reconnaissance System

NRC National Research Council

NRO National Reconnaissance Office

O&S operations and support

OCU operator control unit

ODIS Omni-Directional Inspection System

OODA observe-orient-decide-act

OTH over-the-horizon

PEO Program Executive Office

Perceptor Perception for Offroad Robotics

PMS Program Management Office

QDR Quadrennial Defense Review

R&D research and development

REMUS Remote Environmental Monitoring Unit System

RF radio frequency

RHIB rigid hull inflatable boat

RMP Radar Modernization Program

RONS Remote Ordnance Neutralization System

ROV remotely operated vehicle

RTIP Radar Technology Improvement Program

S&T science and technology

SA situation awareness

SAR synthetic aperture radar

SAS synthetic aperture sonar

SATCOM satellite communications

SDD System Development and Demonstration

SDR Software for Distributed Robotics

SFC specific fuel consumption

SHF superhigh frequency

SIGINT signals intelligence

SIPRNET Secret Internet Protocol Router Network

SLAM simultaneous localization and mapping

SOC Special Operations-Capable

SRS Standardized Robotics System

TALON one robot solution to a variety of mission requirements

TAR tactical autonomous robot

TCA Transformational Communications Architecture

TCDL tactical common data link

TCO Transformational Communications Office

TCP Transmission Control Protocol

TCS Tactical Control System

TDOA time difference of arrival

TPED task, process, exploit, disseminate

TPPU task, post, process, use

TRL Technology Readiness Level

TUAV tactical unmanned aerial vehicle

UCS Unmanned Control System

UGCV Unmanned Ground Combat Vehicle (program)

UGS unattended ground sensor

UGV unmanned ground vehicle

UHF ultrahigh frequency

VHF very high frequency

VMS Vehicle Management System

WNW wideband network wave form

XUV experimental unmanned vehicle

The key to accurate hydrographic mapping is continuous monitoring. The SNAV platform, a robotic ocean workhorse presently under development by a consortium of British engineers, is designed to provide it. Built on a stable SWASH hull, the robot ship monitors the oceans autonomously (COLREGS compliant) without using any diesel fuel. It operates at relatively high speed, 24/7, 365 days a year, which is possible only because of its revolutionary (patented) energy-harvesting system. The hullform is ideal for the automatic release and recovery of ROVs or towed arrays, and the vessel can alternate between drone and fully autonomous modes. UK and international development partners are welcome. In fuel savings alone, the vessel pays for itself every ten years.
