|

Pictured: The Embry-Riddle team (from left): Hitesh V. Patel (Research Associate), Christopher Kennedy (Research Associate), Tim Zuercher (Research Associate)

Navigating a floating obstacle course with aplomb, an autonomous LiDAR-equipped boat from Embry-Riddle Aeronautical University captured first place overall at the 7th Annual International RoboBoat Competition in Virginia Beach, Virginia.

Outfitted with Velodyne’s HDL-32E LiDAR sensor, the pilot-less Embry-Riddle vessel crossed the finish line ahead of the 12 other teams participating in the event, which was sponsored by the Office of Naval Research, the AUVSI (Association for Unmanned Vehicle Systems International) Foundation and several industry partners. The race is the precursor to the Maritime RobotX Challenge, a major AUVSI competition in Singapore.

Central to the competition was the successful navigation of an obstacle field. The Embry-Riddle team mounted the sensor on its boat and was able to navigate through the obstacle course with precision, well ahead of the other competitors. The HDL-32E from Velodyne’s LiDAR Division is part of a growing family of solutions built around the company’s Light Detection and Ranging technology.

“Our performance in the RoboBoat competition was made possible by the amazing Velodyne sensor, which provided us the necessary edge over the other teams,” said Hitesh V. Patel, Research Associate at Embry-Riddle. “Our win in Virginia Beach has paved the way for the RobotX team in Singapore. The Velodyne HDL-32E is such an impressive sensor, and we’re delighted at the initial outcome of this partnership.”

“Our 3D LiDAR sensors guide autonomous vehicles on land, on the seas and in air, and our hats are off to Embry-Riddle University,” said Wolfgang Juchmann, Director of Sales & Marketing, Velodyne LiDAR division. “We’re recognized worldwide for developing real-time LiDAR sensors for all kinds of autonomous applications, including 3D mapping and surveillance.”

“With a continuous 360-degree sweep of its environment, our sensors capture data at a rate of 1.3 million points per second, within a range of 100 meters – ideal for taking on obstacle courses, wherever they may be. Still, it’s up to users to implement the sensor and adapt it to their specific environment. That’s why Embry-Riddle deserves such kudos, and why we’re so looking forward to the Maritime RobotX competition in Singapore later this summer.”

RESULTS

Congratulations to the 2014 RoboBoat Winners:

1st Embry-Riddle Aeronautical University $6,000

2nd University of Florida $5,000

3rd Robotics Club at UCF $3,000

4th Georgia Institute of Technology $2,500

Judges Special Awards:

Innovation Award - Color Normalization Strategy Georgia Institute of Technology $1,000

Innovation Award - Rotating, LIDAR System University of Central Florida $500

Innovation Award - Hull Form and Propulsion Design University of Florida $500

Biggest Bang for the Buck Cedarville University $1,000

ABOUT

EMBRY RIDDLE UNIVERSITY

Embry–Riddle Aeronautical University (also known as Embry-Riddle or ERAU) is a non-profit private university in the United States, with residential campuses in Daytona Beach, Florida and Prescott, Arizona, and a "Worldwide Campus" comprising global learning locations with five modalities of education delivery, and online delivery distance learning designed for students from high school graduate level up. Called "the Harvard of the sky" in the subtitle of an article in Time Magazine in 1979, Embry–Riddle's foundations go back to the early years of flight, and the University now awards associate's, bachelor's, master's, and doctorate degrees in various disciplines, including aviation, aerospace engineering, business, and science.

The university is accredited by the Southern Association of Colleges and Schools to award degrees at both residential campuses, as well as through Embry-Riddle Worldwide, at the associate, bachelor's, master's, and doctoral levels. The engineering programs are fully recognized by the Accreditation Board for Engineering and Technology (ABET). Programs in Aviation Maintenance, Air Traffic Management, Applied Meteorology, Aeronautical Science, Aerospace & Occupational Safety, Flight Operations, and Airport Management are all accredited by the Aviation Accreditation Board International (AABI). The bachelor's and master's degree programs in business at the Worldwide Campus and the residential campuses are accredited by the Association of Collegiate Business Schools and Programs (ACBSP). The programs in Aeronautics, Air Traffic Management, Applied Meteorology, and Aerospace Studies are certified by the Federal Aviation Administration (FAA).

MARITIME APPLICATIONS - SENSORS

Similar to ground vehicle autonomy (see below), maritime autonomy requires a blend of mission and route planning; precise craft localization; obstacle detection and avoidance; assembly, analysis and processing of all sensor inputs; and control functions.

The required sensor(s) must enable detection and identification of obstacles in the water at a sufficient range to perform an evasive maneuver if necessary. The ability to quickly classify the object enables the control system to decide on the proper course of action.

If an obstacle is moving on a trajectory that does not intersect the vessel's path, no course change may be required. If an object is detected only at short range, there may be insufficient time to alter course; hence, sensors of sufficient range and fidelity are essential.
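One common way to decide whether a moving obstacle's trajectory intersects the vessel's is a closest-point-of-approach (CPA) check. The Python sketch below is illustrative only and is not taken from the Embry-Riddle or Velodyne software; the function names, the flat 2D coordinate frame, the constant-velocity assumption, and the safety radius are all assumptions made for the example.

```python
import math

def closest_point_of_approach(rel_pos, rel_vel):
    """Return (time_s, distance_m) at which the obstacle is nearest the vessel.

    rel_pos: obstacle position minus vessel position, (x, y) in meters.
    rel_vel: obstacle velocity minus vessel velocity, (x, y) in m/s.
    Both craft are assumed to hold constant velocity (a simplification).
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        # No relative motion: the separation never changes.
        return 0.0, math.hypot(px, py)
    # Time that minimizes |rel_pos + rel_vel * t|, clamped so we never look into the past.
    t = max(0.0, -(px * vx + py * vy) / speed_sq)
    return t, math.hypot(px + vx * t, py + vy * t)

def needs_course_change(rel_pos, rel_vel, safe_radius_m):
    """True if the predicted miss distance is inside the (hypothetical) safety radius."""
    _, miss_distance = closest_point_of_approach(rel_pos, rel_vel)
    return miss_distance < safe_radius_m
```

The returned time to CPA is what ties this back to sensor range: the later an obstacle is first detected, the smaller that time is, and the less room the control system has to maneuver.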

Using GPS alone for craft localization has proven to be unreliable. A high-resolution sensor can help determine the position of known landmarks such as a coastline, buoys, or other predetermined objects, and confirm craft coordinates from them. Images captured from onboard sensors need stabilization through an IMU or other means. A high-resolution sensor with sufficient frame rate can be used to ascertain vessel pitch, roll, and yaw from observable objects, either stand-alone or in combination with roll detection (digital clinometers).
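As a rough illustration of confirming craft coordinates from a known landmark, the sketch below back-computes the vessel position from a single range-and-bearing measurement to a surveyed buoy and compares it with a GPS fix. It assumes a flat local frame (x east, y north, in meters), a heading supplied by a compass or IMU, and hypothetical function names; a real system would fuse many landmarks and account for sensor noise.

```python
import math

def position_from_landmark(landmark_xy, range_m, bearing_deg, heading_deg):
    """Estimate vessel (x, y) from one landmark of known position.

    landmark_xy: surveyed landmark position (x east, y north), meters.
    range_m:     measured distance from vessel to landmark.
    bearing_deg: bearing to the landmark relative to the bow, clockwise.
    heading_deg: vessel heading, clockwise from north (assumed known).
    """
    absolute_bearing = math.radians(heading_deg + bearing_deg)
    east_offset = range_m * math.sin(absolute_bearing)
    north_offset = range_m * math.cos(absolute_bearing)
    # The landmark sits at vessel + offset, so the vessel is landmark - offset.
    return landmark_xy[0] - east_offset, landmark_xy[1] - north_offset

def fix_disagreement_m(gps_xy, landmark_fix_xy):
    """Distance between the GPS fix and the landmark-derived fix."""
    return math.hypot(gps_xy[0] - landmark_fix_xy[0], gps_xy[1] - landmark_fix_xy[1])
```

A large disagreement between the two fixes would suggest that one of the inputs (GPS, heading, or the landmark match) should not be trusted for that update.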

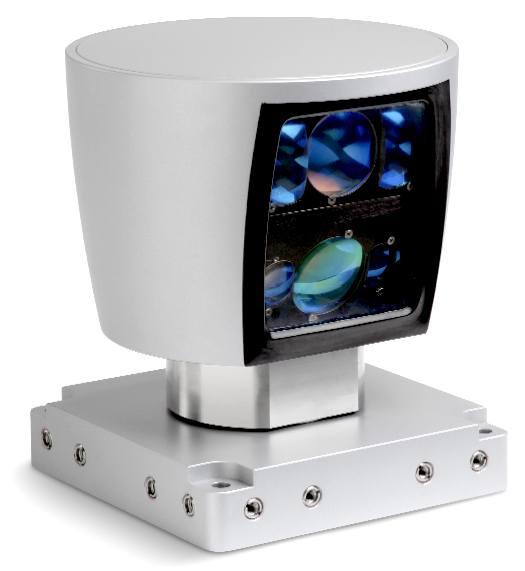

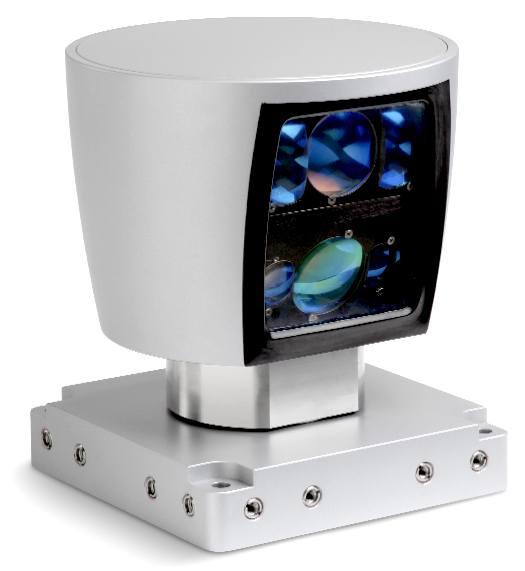

HDL-64E SPECIFICATION (MARINE)

The Velodyne HDL-64E LIDAR contains 64 lasers spread over a 27-degree vertical field, rotating 360 degrees at a rate of 5 to 15 Hz. This builds up a rich and detailed point cloud of the environment. Each laser is a 905 nm IR laser diode that emits a short pulse, which either reflects off an object or is absorbed by, for example, unchurned water.

The sensor reports the distance to the object along with the intensity of that return. Over 1.3 million data points each second are transmitted over the Ethernet interface.
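The figures above imply a trade-off between rotation rate and horizontal point density. The sketch below works through that arithmetic in Python. It assumes the 1.3 million points per second are shared evenly among the 64 lasers with one return per firing, which is a simplification rather than a statement of the sensor's actual firing scheme; the function names are made up for the example.

```python
import math

def azimuth_step_deg(points_per_second, laser_count, rotation_hz):
    """Degrees of rotation between successive firings of one laser."""
    firings_per_revolution = points_per_second / laser_count / rotation_hz
    return 360.0 / firings_per_revolution

def spacing_at_range_m(step_deg, range_m):
    """Arc length between neighbouring returns from one laser at a given range."""
    return math.radians(step_deg) * range_m

# Compare the slowest, a mid, and the fastest rotation rate quoted above.
for hz in (5, 10, 15):
    step = azimuth_step_deg(1.3e6, 64, hz)
    print(f"{hz:>2} Hz: {step:.3f} deg per step, "
          f"{spacing_at_range_m(step, 50.0):.2f} m between points at 50 m")
```

Under these assumptions the azimuth step is about 0.09 degrees at 5 Hz and grows in proportion to the rotation rate, so a faster spin refreshes the scene more often at the cost of coarser horizontal sampling.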

Each of the HDL-64E's lasers emits an optical pulse five nanoseconds in duration through a focusing (target) lens. When the light strikes a target, a portion of it reflects back toward the source. This return light passes through a separate (receiver) lens and a UV sunlight filter. The receiver lens focuses the return light on an avalanche photodiode (APD), which generates an output signal relative to the strength of the received optical signal.

The laser and APD are precisely aligned at the Velodyne factory to provide maximum sensitivity while minimizing signal cross-talk, thus forming a matched emitter-detector pair. The return signal is digitized at over 3 GHz and analyzed via DSP for multiple returns and the intensity of each return. Output is reported in UDP packets over 100 Mbps Ethernet.
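The distance measurement itself rests on time of flight: the pulse travels to the target and back, so range is half the round-trip time multiplied by the speed of light. The Python sketch below shows that relationship and what one sample at a 3 GHz digitization rate corresponds to in range. It is a back-of-the-envelope illustration with hypothetical function names, not a description of Velodyne's DSP, which may interpolate between samples.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def range_from_round_trip(round_trip_s):
    """Target distance in meters for a measured pulse round-trip time."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def round_trip_for_range(range_m):
    """Round-trip time in seconds for a target at the given distance."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_S

def range_per_sample_m(sample_rate_hz):
    """Range covered by one digitizer sample period (before any interpolation)."""
    return SPEED_OF_LIGHT_M_S / (2.0 * sample_rate_hz)

print(f"Round trip for a 100 m target: {round_trip_for_range(100.0) * 1e9:.0f} ns")
print(f"Range per sample at 3 GHz: {range_per_sample_m(3e9) * 100:.1f} cm")
```

At 3 GHz, one sample period corresponds to roughly 5 cm of range, which gives a sense of why such a high digitization rate is used.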

Finally, the HDL-64E is weatherproofed for maritime use. Thanks to its IP67 rating and rotating design, casual water does not pose a risk to sensor operation. The 64E uses a stainless steel housing to cope with salt water environments.

Velodyne's HDL-64E and HDL-32E lidar navigation sensors

VELODYNE LIDAR

Velodyne's expertise with laser distance measurement started with its participation in the 2005 Grand Challenge sponsored by the Defense Advanced Research Projects Agency (DARPA). The event was a race for autonomous vehicles across the Mojave Desert; DARPA's goal was to stimulate autonomous vehicle technology development for both military and commercial applications.

Velodyne founders Dave and Bruce Hall entered the competition as Team DAD (Digital Auto Drive), traveling 6.2 miles in the first event and 25 miles in the second. The team developed technology for visualizing the environment, first using a dual video camera approach and later developing the laser-based system that laid the foundation for Velodyne's current products.

The first Velodyne LIDAR scanner was about 30 inches in diameter and weighed close to 100 lbs. Choosing to commercialize the LIDAR scanner instead of competing in subsequent challenge events, Velodyne was able to dramatically reduce the sensor's size and weight while also improving performance.

Velodyne's HDL-64E sensor was the primary means of terrain map construction and obstacle detection for all the top DARPA Urban Challenge teams in 2007 and was used by five of the six finishing teams, including the winning and second-place teams. In fact, some teams relied exclusively on the HDL-64E for the environmental information used to navigate an autonomous vehicle through a simulated urban environment.

VELODYNE MILESTONES

1983 - Velodyne Acoustics formed when David Hall patents servo control for loudspeakers.

1995 - David Hall patents Class D 97% efficient amplifier. Velodyne subwoofers are recognized worldwide as best-in-class.

2000 - 2001 - Dave and Bruce Hall participate in BattleBots®, Robotica®, and Robot Wars® competitions.

2004 - Team Digital Auto Drive (DAD) competes in the first DARPA Grand Challenge using stereo-vision technology. The vehicle survives 6.0 miles, finishing third.

2005 - Team DAD enters the second Grand Challenge using 64-element LIDAR technology. A steering control board failure ends their race at 25 miles, finishing 11th of all finishers. Five vehicles complete the race, with Stanford Racing team winning the event.

2006 - Velodyne offers a more compact version of its 64-element LIDAR scanner, the HDL-64E, for sale. Top Grand Challenge teams immediately place orders. Team DAD declines to enter the next DARPA event, the Urban Challenge, focusing instead on supplying LIDAR scanners to all top teams.

2007 - The HDL-64E was used by five out of six of the finishing teams at the 2007 DARPA Urban Challenge, including the winning and second place teams.

2008 - The HDL-64E S2 Series 2 sensor is introduced.

2010 - Velodyne launches the HDL-32E.

VELODYNE DOWNLOADS

Datasheets

- HDL-32E

- HDL-64E

Articles

Mapping the World in 3D

Mobile Mapping and Data Collection

LIDAR in the Driver's Seat

Cars that drive themselves

White Papers

Static Calibration and Analysis of the Velodyne HDL-64E S2 for High Accuracy Mobile Scanning

A High Definition Lidar Sensor for 3-D Applications

Heavy Vehicle Sensing Strategies

Maritime Applications of LIDAR Sensors for Obstacle Avoidance and Navigation

Manuals

- HDL-32E

- HDL-64E

Software / Firmware

VeloView (current visualization software for HDL)

Sample Data for VeloView (HDL-32E)

DSR Viewer (previous visualization software for HDL)

HDL-32E Firmware (most recent version: 2.1.7.0)

HDL-32E Firmware (previous version: 2.0.6.0)

HDL-32E Firmware Release notes

HDL-32E Firmware Description and Upload Procedure

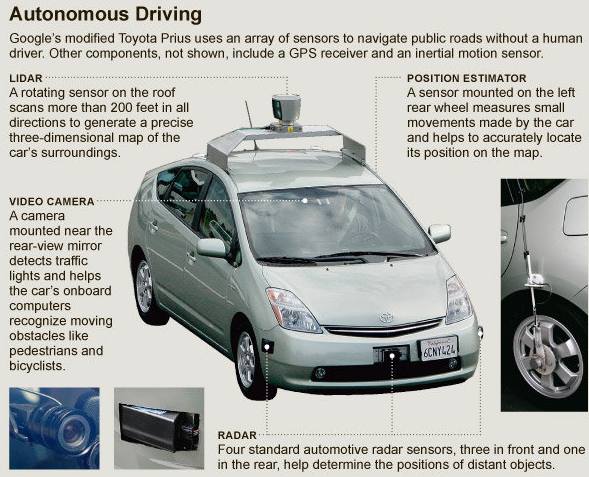

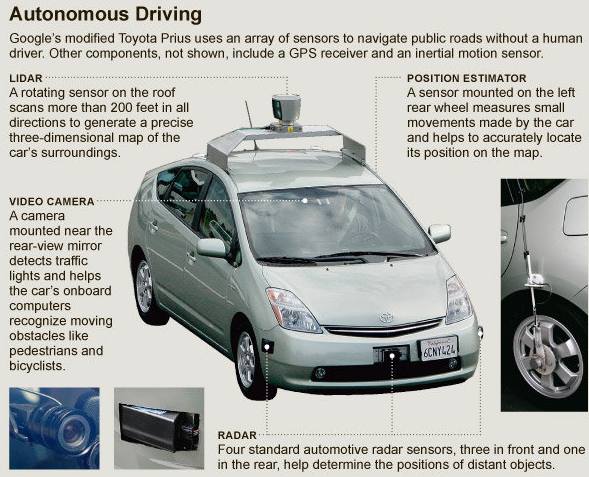

The HDL-64E equipped Toyota Prius on a Californian highway

NEW YORK TIMES - GOOGLE AUTONOMOUS CAR - TOYOTA PRIUS - OCTOBER 2010

MOUNTAIN VIEW, Calif. — Anyone driving the twists of Highway 1 between San Francisco and Los Angeles recently may have glimpsed a Toyota Prius with a curious funnel-like cylinder on the roof. Harder to notice was that the person at the wheel was not actually driving.

The car is a project of Google, which has been working in secret but in plain view on vehicles that can drive themselves, using artificial-intelligence software that can sense anything near the car and mimic the decisions made by a human driver.

With someone behind the wheel to take control if something goes awry and a technician in the passenger seat to monitor the navigation system, seven test cars have driven 1,000 miles without human intervention and more than 140,000 miles with only occasional human control. One even drove itself down Lombard Street in San Francisco, one of the steepest and curviest streets in the nation. The only accident, engineers said, was when one Google car was rear-ended while stopped at a traffic light.

Autonomous cars are years from mass production, but technologists who have long dreamed of them believe that they can transform society as profoundly as the Internet has.

Robot drivers react faster than humans, have 360-degree perception and do not get distracted, sleepy or intoxicated, the engineers argue. They speak in terms of lives saved and injuries avoided — more than 37,000 people died in car accidents in the United States in 2008. The engineers say the technology could double the capacity of roads by allowing cars to drive more safely while closer together. Because the robot cars would eventually be less likely to crash, they could be built lighter, reducing fuel consumption. But of course, to be truly safer, the cars must be far more reliable than, say, today’s personal computers, which crash on occasion and are frequently infected.

The Google research program using artificial intelligence to revolutionize the automobile is proof that the company’s ambitions reach beyond the search engine business. The program is also a departure from the mainstream of innovation in Silicon Valley, which has veered toward social networks and Hollywood-style digital media.

During a half-hour drive beginning on Google’s campus 35 miles south of San Francisco last Wednesday, a Prius equipped with a variety of sensors and following a route programmed into the GPS navigation system nimbly accelerated in the entrance lane and merged into fast-moving traffic on Highway 101, the freeway through Silicon Valley.

It drove at the speed limit, which it knew because the limit for every road is included in its database, and left the freeway several exits later. The device atop the car produced a detailed map of the environment.

The car then drove in city traffic through Mountain View, stopping for lights and stop signs, as well as making announcements like “approaching a crosswalk” (to warn the human at the wheel) or “turn ahead” in a pleasant female voice. This same pleasant voice would, engineers said, alert the driver if a master control system detected anything amiss with the various sensors.

The car can be programmed for different driving personalities — from cautious, in which it is more likely to yield to another car, to aggressive, where it is more likely to go first.

Christopher Urmson, a Carnegie Mellon University robotics scientist, was behind the wheel but not using it. To gain control of the car he has to do one of three things: hit a red button near his right hand, touch the brake or turn the steering wheel. He did so twice, once when a bicyclist ran a red light and again when a car in front stopped and began to back into a parking space. But the car seemed likely to have prevented an accident itself.

When he returned to automated “cruise” mode, the car gave a little “whir” meant to evoke going into warp drive on “Star Trek,” and Dr. Urmson was able to rest his hands by his sides or gesticulate when talking to a passenger in the back seat. He said the cars did attract attention, but people seem to think they are just the next generation of the Street View cars that Google uses to take photographs and collect data for its maps.

The project is the brainchild of Sebastian Thrun, the 43-year-old director of the Stanford Artificial Intelligence Laboratory, a Google engineer and the co-inventor of the Street View mapping service.

In 2005, he led a team of Stanford students and faculty members in designing the Stanley robot car, winning the second Grand Challenge of the Defense Advanced Research Projects Agency, a $2 million Pentagon prize for driving autonomously over 132 miles in the desert.

Besides the team of 15 engineers working on the current project, Google hired more than a dozen people, each with a spotless driving record, to sit in the driver’s seat, paying $15 an hour or more. Google is using six Priuses and an Audi TT in the project.

The Google researchers said the company did not yet have a clear plan to create a business from the experiments. Dr. Thrun is known as a passionate promoter of the potential to use robotic vehicles to make highways safer and lower the nation’s energy costs. It is a commitment shared by Larry Page, Google’s co-founder, according to several people familiar with the project.

EMBRY RIDDLE CONTACTS

Worldwide Campus

Embry-Riddle Aeronautical University

600 S. Clyde Morris Boulevard

Daytona Beach, FL 32114-3900

Call: 800-522-6787

Email: Worldwide@erau.edu

Daytona Beach, FL Campus

Embry-Riddle Aeronautical University

600 S. Clyde Morris Boulevard

Daytona Beach, FL 32114-3900

Call: 800-222-3728 or 386-226-6000

Email: DaytonaBeach@erau.edu

University & Administration

Embry-Riddle Aeronautical University

600 S. Clyde Morris Boulevard

Daytona Beach, FL 32114-3900

Call: 386-226-6000

Email: DaytonaBeach@erau.edu

Prescott, AZ Campus

Embry-Riddle Aeronautical University

3700 Willow Creek Road

Prescott, AZ 86301-3720

Call: 800-888-3728 or 928-777-3728

Email: Prescott@erau.edu

VELODYNE CONTACTS

Velodyne Acoustics, Inc.

LiDAR Division/Sales

345 Digital Drive

Morgan Hill, CA 95037

Headquarters: 408-465-2800

Sales & Service: 408-465-2859

e-mail: lidar@velodyne.com

LINKS & REFERENCE

- Marine Technology News, RoboBoat competition (Embry-Riddle): http://www.marinetechnologynews.com/news/roboboat-competition-embryliddle-496970

- Velodyne Lidar: http://www.velodynelidar.com

- Embry-Riddle Aeronautical University: http://www.erau.edu/

- Wikipedia, Embry–Riddle Aeronautical University: http://en.wikipedia.org/wiki/Embry%E2%80%93Riddle_Aeronautical_University

- New York Times, 2010, Google autonomous car: http://www.nytimes.com/2010/10/10/science/10google.html?_r=1

ASTM Committee F41 on UUV Standards

Autonomous Undersea Systems Institute

AUV Lab at MIT Sea Grant

Florida Atlantic U's Advanced Marine Systems Lab

Heriot-Watt University (Scotland) Ocean Systems Lab

Institute for Exploration

Marine Advanced Technology Education (MATE) Center

Marine Technology Society

Monterey Bay Aquarium Research Institute

Passive Sonar System for UUV Surveillance Tasks

Research Submersibles and Undersea Technologies

SDiBotics

Woods Hole Oceanographic Institute

U.S. Navy's Naval Undersea Warfare Center UUV Programs

U.S. Office of Naval Research

UV Sentry Presentation

Automated Imaging Association

Federation of American Scientists

NASA JPL's Robotics

National Defense Industrial Association (NDIA)

Remote Control Aerial Photography Association

Robotic Industries Association

SACLANTCEN

Sandia National Laboratories

Seattle Robotics Society

Society of Automotive Engineers

SPIE

U.S. Department of Defense Science Board Report on Autonomy

Systems Engineering for Autonomous Systems (SEAS) Defence Technology Centre (DTC)

Electro-Magnetic Remote Sensing (EMRS) Defence Technology Centre (DTC)

|